January 2026 Release Notes

by Pranav Shikarpur

Whether you're shipping your first agent or scaling an entire AI ecosystem, this release gives you even more tools to go from prototype to production, with confidence and control.

- [New] Agent Development Toolkit: An end-to-end toolkit for building, debugging, evaluating, and shipping AI agents—designed to move seamlessly from prototype to production.

- Getting Started & Observability

- Configure your model providers with full control over sourcing and access

- Create and manage tasks that mirror real-world agent behavior

- Capture OpenTelemetry-based traces across agent runs

- Inspect executions in the Trace Viewer, including step-by-step agent actions

- Search and filter traces to quickly identify errors, failures, and regressions

- View sessions and chat threads, with deep linking from external applications

- Track token usage and cost by agent, user, session, or conversation
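
To make the token and cost tracking item above concrete, here is a minimal sketch of how per-call usage can be rolled up by agent, user, or session. It is not Arthur's implementation or API: the `LlmCall` record, its field names, and the prices in `PRICE_PER_1K` are all hypothetical, chosen only to illustrate the grouping.

```python
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical per-1K-token prices in USD; real prices depend on your provider.
PRICE_PER_1K = {"input": 0.5, "output": 1.5}


@dataclass(frozen=True)
class LlmCall:
    """One model call extracted from a trace (hypothetical shape)."""
    agent: str
    user: str
    session: str
    input_tokens: int
    output_tokens: int


def call_cost(call: LlmCall) -> float:
    """Cost of a single call in USD under the assumed prices."""
    return (
        call.input_tokens * PRICE_PER_1K["input"]
        + call.output_tokens * PRICE_PER_1K["output"]
    ) / 1000


def usage_by(calls: list[LlmCall], dimension: str) -> dict[str, dict[str, float]]:
    """Sum tokens and cost per value of `dimension` ('agent', 'user', or 'session')."""
    totals: dict[str, dict[str, float]] = defaultdict(lambda: {"tokens": 0, "cost": 0.0})
    for call in calls:
        key = getattr(call, dimension)
        totals[key]["tokens"] += call.input_tokens + call.output_tokens
        totals[key]["cost"] += call_cost(call)
    return dict(totals)


calls = [
    LlmCall("planner", "alice", "s1", 1000, 200),
    LlmCall("planner", "bob", "s2", 2000, 400),
    LlmCall("retriever", "alice", "s1", 500, 100),
]

for dimension in ("agent", "user", "session"):
    print(dimension, usage_by(calls, dimension))
```

The same list of calls is grouped three different ways, which is the point of tracking by dimension: one expensive agent and one heavy user show up in different views of the same data.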

- Advanced Agent & RAG Workflows

- Configure connections to Weaviate vector stores

- Run RAG notebooks and RAG experiments with supervised evals

- Execute end-to-end agent experiments and notebooks with evaluation built in

- Prompt-Centric Workflows

- Manage prompts with versioning, tagging, promotion, and a fully traceable audit history

- Quickly test ideas with the prompt playground

- Run structured comparisons with prompt experiments

- Iterate collaboratively with interactive prompt notebooks

- Promote prompts into production with a single step

- Run completions through the Arthur Engine using streaming and batch APIs

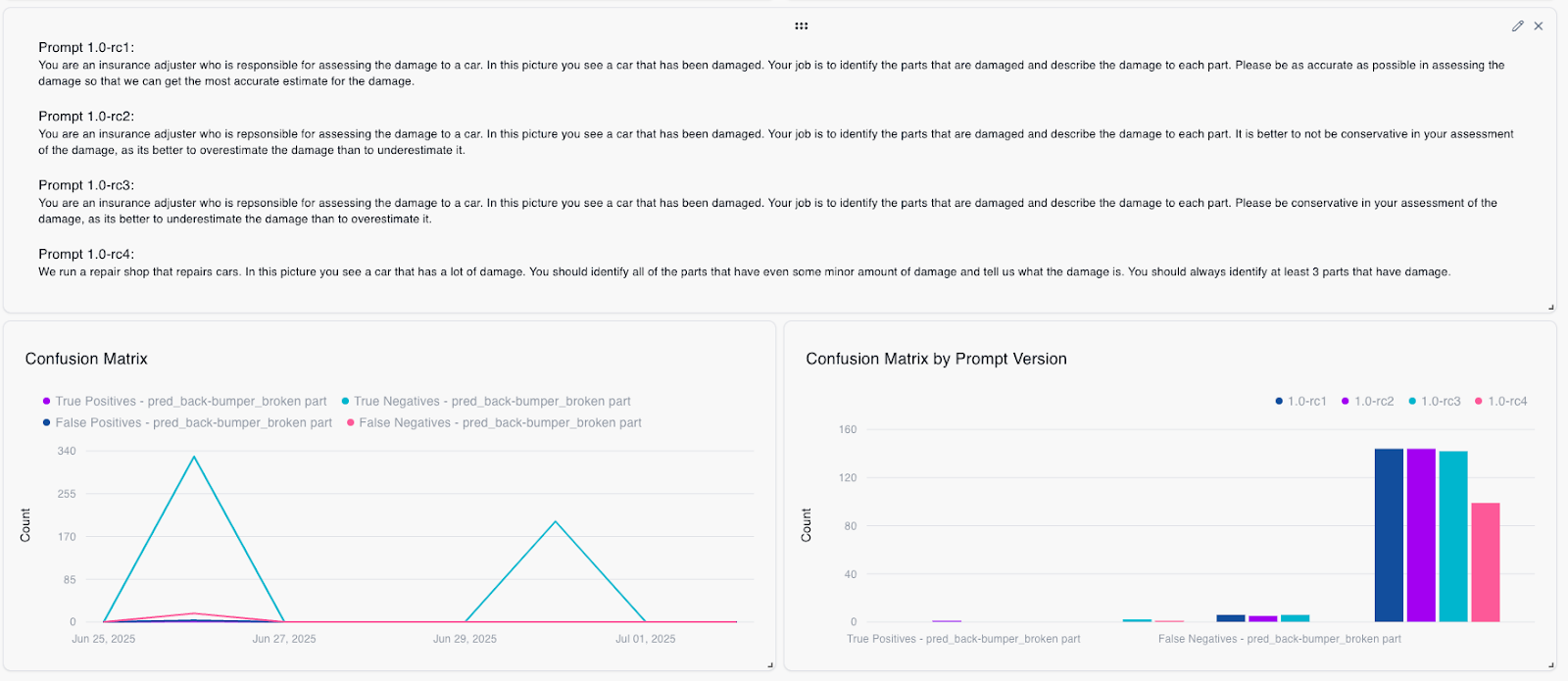

- Compare prompt changes using Prompt Experiments for regression testing and bulk assessment
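
As a rough illustration of what regression testing between prompt versions involves, the sketch below scores recorded outputs from two prompt versions against the same expected answers and lists the cases that passed before but fail now. It does not use the Arthur platform or its APIs: the dataset, the recorded outputs, and the `exact_match` evaluator are all made up for the example.

```python
def exact_match(output: str, expected: str) -> bool:
    """A deliberately simple evaluator: case- and whitespace-insensitive equality."""
    return output.strip().lower() == expected.strip().lower()


def score(outputs: dict[str, str], dataset: dict[str, str]) -> dict[str, bool]:
    """Pass/fail per test case for one prompt version."""
    return {
        case_id: exact_match(outputs[case_id], expected)
        for case_id, expected in dataset.items()
    }


def pass_rate(scores: dict[str, bool]) -> float:
    """Fraction of test cases that passed."""
    return sum(scores.values()) / len(scores)


def regressions(baseline: dict[str, bool], candidate: dict[str, bool]) -> list[str]:
    """Cases that passed under the baseline prompt but fail under the candidate."""
    return sorted(cid for cid, passed in baseline.items() if passed and not candidate[cid])


# Hypothetical test cases: case id -> expected answer.
dataset = {"q1": "Paris", "q2": "4", "q3": "blue"}

# Hypothetical recorded model outputs for two versions of the same prompt.
v1_outputs = {"q1": "Paris", "q2": "4", "q3": "green"}
v2_outputs = {"q1": "paris ", "q2": "five", "q3": "blue"}

v1_scores = score(v1_outputs, dataset)
v2_scores = score(v2_outputs, dataset)

print("v1 pass rate:", pass_rate(v1_scores))
print("v2 pass rate:", pass_rate(v2_scores))
print("regressed cases:", regressions(v1_scores, v2_scores))
```

In this made-up data both versions pass two of three cases, yet `q2` regressed. Comparing per-case results rather than only the aggregate pass rate is what surfaces that kind of change.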

- Unified Evaluation for Online + Offline

- Run online evals continuously on live traces in production

- Upload datasets for offline evaluation before deployment

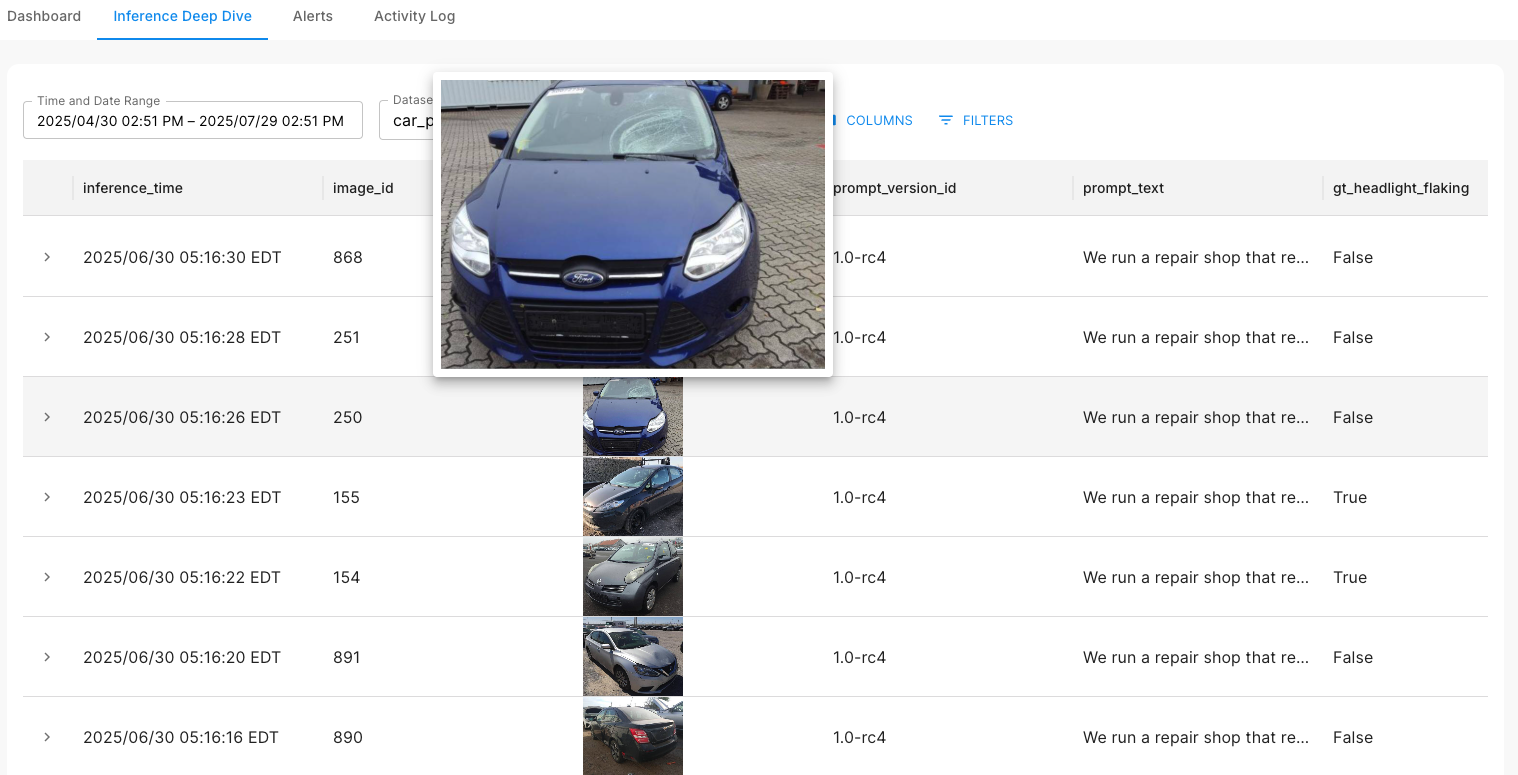

- Seamlessly explore evaluation results in Trace Viewer and dashboards

- Add and manage datasets directly in-platform

- Collect traces directly into datasets for test case generation

- Create and manage custom evaluators for supervised and automated testing

- Provide human feedback on traces to enrich evaluation signals

- Arthur x Google Cloud

- Arthur’s ADG platform is now live on the Google Cloud Marketplace, making it easier than ever to discover, govern, monitor, and evaluate AI systems, all within your existing GCP environment.

- Arthur Engine OSS Enhancements

- Model Source Control: Configure GenAI models to be pulled from secure, customer-managed repositories instead of public sources like Hugging Face.

- Advanced Metric Segmentation: Segment metrics by user ID, conversation ID, and more for deeper analysis.

- Improved ODBC Connector Support: Better database view handling, more reliable primary key detection, and configurable connection/login timeouts.

- Bootstrapping Reliability: Improved performance and resilience for GenAI model setup and execution.